by Maya Gervits, Director of the Littman Architecture & Design Library, New Jersey Institute of Technology

Barbara Opar and Barret Havens, column editors

Librarians at many institutions are being asked to perform citation analysis, which is typically used to evaluate the merit of an individual publication or a body of work. Traditionally, citation analysis has rested on the assumption that an article cited frequently, and in a prestigious journal, is more likely to be of higher quality. However, over the last few years a growing number of publications have revealed the deficiencies of the commonly used tools and methods for citation analysis.

These publications argue that “citation data provides only a limited and incomplete view of research quality”[1] and that there is a general lack of understanding of “how different data sources and citation metrics might affect comparison between disciplines.”[2] Moreover, many of them suggest that with the existing system, we witness an “overemphasis of academics in the hard sciences rather than those in the social sciences and especially in the humanities.”[3] A. Zuccala, in her article “Evaluating the Humanities: Vitalizing ‘the Forgotten Sciences,’” published in Research Trends in March 2013, echoes H. Moed, who wrote that “the journal communication system in these disciplines does not reveal a core-periphery structure as pronounced as it is found to be in science.”[4] Zuccala confirms that in the humanities (and this is true for art and design disciplines as well. M.G.) information is often disseminated using media other than journals, and that the humanities “demand a fairly wide range of quality indicators that will do justice to the diversity of products, target groups, and publishing cultures present in this field.”[5]

Therefore, indexes that are popular in the sciences, such as Web of Knowledge or Scopus, do not serve as well in determining citation value in art and design disciplines. Many journals, conference and symposium materials, as well as books and book chapters in these fields, are typically not listed at all. Even indexes specializing in the humanities, like the European Reference Index for the Humanities (ERIH) or the Arts & Humanities Citation Index, still cover only a limited number of titles focused specifically on architecture and design.

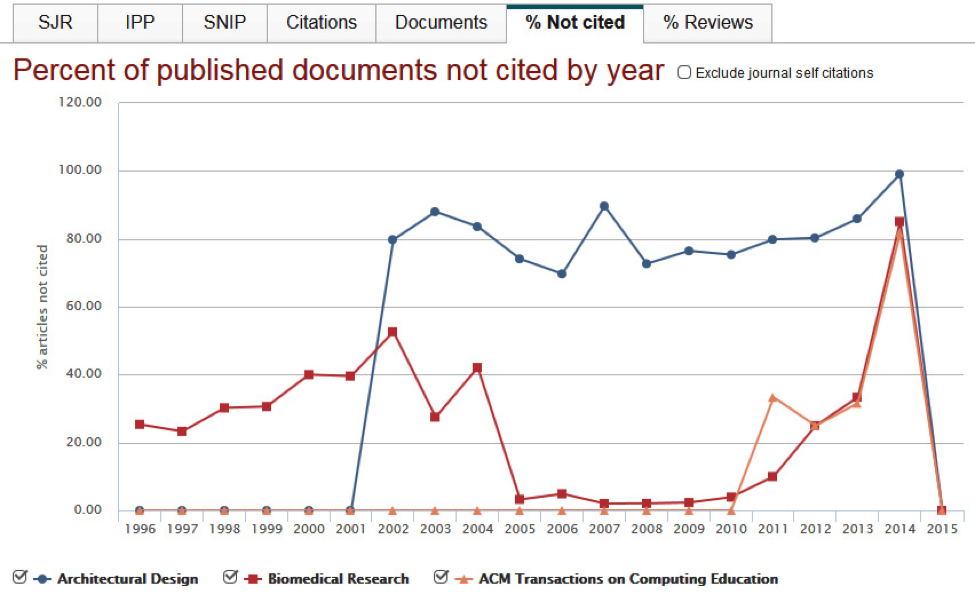

In addition to the aforementioned limitations, it is also important to acknowledge that citation patterns in STEM[6] disciplines are different than those in the arts and humanities. For example, as demonstrated on the chart below, it takes longer for a work in architecture to be cited than for a paper in biology or computer science.

Comparison of citation patterns.

Thomson Reuters, owner of the Web of Knowledge database, one of the most popular citation indexes, suggests that even in the fastest-moving fields, such as molecular biology and genetics, it might take up to two years to accrue citations, whereas in physiology or analytical chemistry, “the time lag in citations might be on average three, four or even five years.”[7] In art and architecture it might take even longer, as authors in these fields cite recently published documents less frequently than their colleagues in the hard sciences. Also, according to David Pendlebury (“Using Bibliometrics in Evaluating Research”), citation rates vary across fields of research, an observation confirmed by the statistical data in “The Tyranny of Citations” by P.G. Altbach, who states that “the sciences produce some 350,000 new cited references weekly, while the social sciences generate 50,000, and the humanities, 15,000.”[8] Pendlebury also notes: “The average ten-year-old paper in molecular biology and genetics may collect forty citations, whereas the average ten-year-old paper in a computer science journal may garner a relatively modest four citations.”[9] The article “How Much of the Literature Goes Uncited?” also reveals a wide gap in citation rates even among non-STEM disciplines: 98 percent of arts and humanities papers remain uncited, versus 74.7 percent in the social sciences.[10]

So what can be done to overcome the limitations of traditional tools and data sources? Many researchers have turned their attention to Google Scholar, which has lately gained popularity and acceptance as a tool for identifying and analyzing citations. However, it lacks quality control and is not comprehensive: some scholarly journals, publications in languages other than English, and recent or forthcoming publications may be excluded. Book reviews and Google Books can help locate otherwise hard-to-find citations in monographs and collections of essays; however, their coverage is inconsistent.

Scholars whose research focuses on areas related to computer-aided design can use CumInCAD and the ACM Digital Library (ACM DL) as sources of additional alternative data. The CumInCAD database offers peer ratings, while ACM DL tracks downloads, that is, the cumulative number of times a scholar’s work has been downloaded from the ACM full-text article server. Over the last few years, there has been an ongoing discussion of the correlation between downloads and citations. For example, the article “Comparing Citations and Downloads for Individual Articles at the Journal of Vision,” published in 2009, analyzes the number of unique downloads as a new measure of an article’s impact. It establishes a strong positive correlation between downloads and citations, suggesting that the “substantial correlation, joined to the fact that downloads generally precede citations, would mean they provide a useful early predictor of eventual citations.”[11]

Another commonly used measure for scholarship evaluation is an academic journal’s impact factor, which is traditionally used to determine the relative importance of a journal within its field. Journals with a higher impact factor are deemed to be more important than those with a lower one. However, this method has also received some deserved criticism. The article “Ending the Tyranny of the Impact Factor” (Nature Cell Biology, 2014) highlights “limitations of journal impact factors” and bemoans “their misuse as a proxy for the quality of individual papers.”[12] It is especially important to note that outside the U.S. the distinction between commercial and university publishers is not always clear, and that professional periodicals in architecture and design can be as valuable and prestigious as those published by university presses. AASL has compiled a list of core periodicals essential for the study of design disciplines in academia.

The analysis of statistical data provided by professional organizations and conferences, which often indicate the acceptance rate of paper submissions, can offer some additional parameters for scholarship evaluation as well. The recently published “Who Reads Research Articles? An Altmetrics Analysis of Mendeley User Categories”[13] suggests that “Mendeley[14] statistics that record how many times an author’s work has been included in bibliography, can also reveal the hidden impact of some research papers.” The notion that in architecture, design and new media the sources of citations should be broadened to include not only print publications but also a variety of digital resources available via the web has become more widely accepted.[15] Emerging alternative tools for citation analysis, like Plum Analytics (plumanalytics.com) and Altmetric (altmetric.com), are attempting to include non-traditional indicators in the measurement of impact, such as the amount of online attention garnered through coverage in news outlets, blog posts, or tweets. However, these new tools are still focused mainly on fields other than architecture and design.

The Junior Faculty Handbook on Tenure and Promotion published by the Association of Collegiate Schools of Architecture (ACSA) acknowledges that “All too often, discussions revolve around the number of articles or the quality of academic press while the real issue should be: how is the individual affecting and improving his or her field of expertise?”[16] Furthermore, the College Art Association (CAA) document Standards for Retention and Tenure of Art and Design Faculty confirms that “an exhibition and/or peer-reviewed public presentation of creative work is to be regarded as analogous to publication in other fields.”[17] The CAA also recommends that judgments of the quality of a candidate’s publication be based on the assessment of expert reviewers who have read the work and can compare it to the state of scholarship in the field to which it contributes.

The review of existing literature and practices suggests that there is a need to design a more holistic model for research assessment: a model that takes into consideration various measures of impact and multiple research outputs, especially for architecture and design. Such a model could then be adopted and used by various institutions as a guideline. The attention given to this issue suggests that perhaps it is time for a broader discussion among representatives of different constituencies such as faculty, administration, librarians and other researchers.

But until a new model is accepted, faculty and librarians must rely on the tools that exist today and learn how best to adapt them to serve current needs. As AASL continues to highlight the challenges of tenure metrics in architecture and design-related disciplines, in this column next month my colleague Rose Orcutt, Architecture and Planning Librarian at the University at Buffalo, will discuss the range of currently available metric tools that may offer some additional solutions.

[1] Robert Adler, John Ewing and Peter Taylor, Citation Statistics: a report from the International Mathematical Union (IMU) in cooperation with the International Council of Industrial and Applied Mathematics (ICIAM) and the Institute of Mathematical Statistics (IMS). Statistical Science, vol. 24, no. 1, 2009, at http://arxiv.org/pdf/0910.3529.pdf

[2] Anne-Wil Harzing, Citation analysis across disciplines: The impact of different data sources and citation metrics, at http://www.harzing.com/data_metrics_comparison.htm

[3] Philip G. Altbach, The Tyranny of Citations. Inside Higher Ed, 2006, at https://www.insidehighered.com/views/2006/05/08/altbach

[4] The very specific nature of research in these disciplines is reflected in very specific output: the importance of monographs, chapters in monographs, exhibition catalogs, publications in various languages, and the inclusion of revised editions. Henk Moed, Citation Analysis in Research Evaluation. Springer, 2005.

[5] http://www.researchtrends.com/issue-32-march-2013/evaluating-the-humanities-vitalizing-the-forgotten-sciences

[6] STEM is the acronym for science, technology, engineering and mathematics

[7] Pendlebury, D. Using Bibliometrics in Evaluating Research, at http://wokinfo.com/media/mtrp/UsingBibliometricsinEval_WP.pdf

[8] Altbach, op. cit.

[9] Pendlebury, op. cit.

[10] http://scholarlykitchen.sspnet.org/2012/12/20/how-much-of-the-literature-goes-uncited/

[11] Watson, A. Comparing citations and downloads for individual articles at the Journal of Vision. Journal of Vision, vol. 9, April 2009, at http://jov.arvojournals.org/article.aspx?articleid=2193506

[12] Ending the Tyranny of the Impact Factor. Nature Cell Biology 16, 1 (2014), at http://www.nature.com/ncb/journal/v16/n1/full/ncb2905.html

[13] https://www.academia.edu/6298635/Who_Reads_Research_Articles_An_Altmetrics_Analysis_of_Mendeley_User_Categories

[14] Mendeley: software for managing references, creating bibliographies, scholarly collaboration, and research sharing.

[15] “New Criteria for New Media,” Leonardo, vol. 42, 2009.

[16] https://www.acsa-arch.org/resources/faculty-resources/diversity-resources/handbooks/junior-faculty-handbook-on-tenure-and-promotion

[17] Standards for Retention and Tenure of Art and Design Faculty, revised October 2011, at http://www.collegeart.org/guidelines/tenure2
